Artificial Intelligence

The term Artificial Intelligence combines two words: “artificial,” meaning man-made, and “intelligence,” meaning thinking power. AI therefore means “a man-made thinking power.”

“It is a branch of computer science by which we can create intelligent machines which can behave like humans, think like humans, and make decisions.”

Artificial Intelligence exists when a machine has human-like skills such as learning, reasoning, and problem-solving.

With Artificial Intelligence, you do not need to preprogram a machine for every task. Instead, you can create a machine with programmed algorithms that can work with its own intelligence, and that is what makes AI so powerful.

The idea behind AI is not new. Some people point out that, according to Greek myth, there were mechanical men in ancient times that could work and behave like humans.

AI Subset

Artificial Intelligence (AI) is a broad field encompassing several specialized areas, each focusing on different aspects of creating intelligent systems. Here are some of the key subsets of AI:

1. Machine Learning (ML)

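Machine learning is when a machine learns from its past experiences (data) instead of being explicitly programmed for every case.

Supervised Learning: If a machine learns from its past experiences with guidance or oversight, typically examples that are already labeled with the correct answer, it is said to be learning under supervision.

Unsupervised Learning: If a machine learns from its past experiences without any guidance or oversight, finding patterns or groups in unlabeled data on its own, it is learning without supervision.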

Reinforcement Learning: Algorithms learn by interacting with an environment and receiving feedback in the form of rewards or punishments.
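The three learning styles above can be contrasted with a small sketch. Everything in it is illustrative: the fruit weights, the function names (`predict_label`, `split_into_two_groups`, `update_value`) and the learning rate are assumptions made for this example, not part of any library.

```python
# Supervised learning: every training example comes with a label (the "guidance").
labelled_fruit = [
    (150, "apple"), (160, "apple"), (170, "apple"),   # (weight in grams, label)
    (110, "lemon"), (100, "lemon"), (90, "lemon"),
]

def predict_label(weight, examples):
    """1-nearest-neighbour: copy the label of the closest known example."""
    closest = min(examples, key=lambda example: abs(example[0] - weight))
    return closest[1]

# Unsupervised learning: the same weights, but with no labels at all.
weights = [150, 160, 170, 110, 100, 90]

def split_into_two_groups(values, rounds=10):
    """A tiny 1-D k-means with k=2 (assumes at least two distinct values)."""
    low_centre, high_centre = min(values), max(values)
    for _ in range(rounds):
        low = [v for v in values if abs(v - low_centre) <= abs(v - high_centre)]
        high = [v for v in values if abs(v - low_centre) > abs(v - high_centre)]
        low_centre = sum(low) / len(low)
        high_centre = sum(high) / len(high)
    return sorted(low), sorted(high)

# Reinforcement learning: no examples, only rewards or punishments after acting.
def update_value(old_value, reward, learning_rate=0.5):
    """Move the estimated value of an action towards the reward just received."""
    return old_value + learning_rate * (reward - old_value)

value_of_action = 0.0
for reward in [1, 1, -1, 1]:          # +1 is a reward, -1 is a punishment
    value_of_action = update_value(value_of_action, reward)

print(predict_label(158, labelled_fruit))   # uses the labels
print(split_into_two_groups(weights))       # finds the groups without labels
print(value_of_action)                      # estimate shaped by feedback
```

The supervised function needs the labels to answer, the unsupervised one discovers two groups on its own, and the reinforcement update only ever sees a reward signal.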

2. Deep Learning (DL)

A specialized subset of ML that uses neural networks with many layers (deep neural networks) to analyze complex patterns in large amounts of data. Applications of DL include image and speech recognition, natural language processing (NLP), autonomous vehicles, etc.

3. Natural Language Processing (NLP)

A subset of AI focused on enabling machines to understand, interpret, and generate human language. Applications: Chatbots, language translation, sentiment analysis, voice assistants, etc.

4. Computer Vision

A field of AI that enables machines to interpret and make decisions based on visual input from the world, such as images and videos. Computer vision is used in facial recognition, medical image analysis, autonomous vehicles, and object detection.

5. Robotics

A branch of AI that deals with the design, construction, operation, and use of robots, often integrating ML, computer vision, and NLP. Applications include manufacturing, healthcare (surgical robots), service robots, space exploration, etc.

6. Neural Networks

A set of algorithms modeled loosely after the human brain, designed to recognize patterns and interpret sensory data. Used in deep learning for image and speech recognition, recommendation systems, and more.
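As a minimal sketch of the building block behind a neural network, the code below models one artificial neuron: it multiplies each input by a weight, adds a bias, and "fires" if the total is positive. The function name `neuron` and the hand-picked weights are assumptions for illustration; real networks learn their weights from data and connect many such neurons in layers.

```python
def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs, then a step activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0

# Hand-picked values (an assumption for this sketch) that make the neuron
# behave like a logical AND: it fires only when both inputs are 1.
and_weights, and_bias = [1.0, 1.0], -1.5

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", neuron([a, b], and_weights, and_bias))
```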

Each of these subsets contributes to the broader field of AI by focusing on specific aspects of intelligence, learning, perception, or decision-making.

Why Artificial Intelligence?

Before learning about Artificial Intelligence, we should understand why AI is important and why we should learn it. Following are some main reasons to learn about AI:

- With the help of AI, you can create software or devices that solve real-world problems quickly and accurately, in areas such as healthcare, marketing, and traffic management.

- With the help of AI, you can create your own personal virtual assistant, similar to Cortana, Google Assistant, Siri, etc.

- With the help of AI, you can build robots that can work in environments where human survival would be at risk.

- AI opens a path for other new technologies, new devices, and new opportunities.

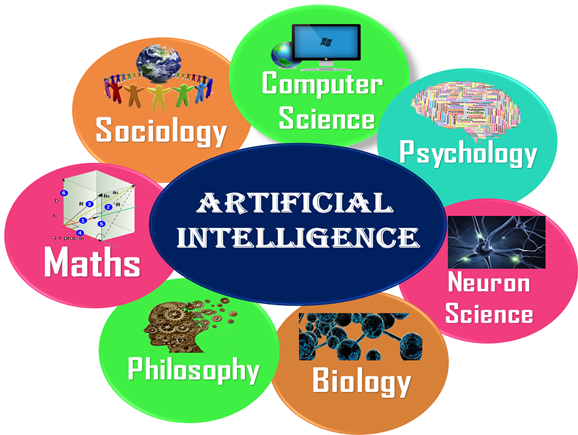

Artificial Intelligence is not just a part of computer science; it is a vast field that draws on many other disciplines. To create AI, we should first know how intelligence is composed. Intelligence is an intangible part of our brain that combines reasoning, learning, problem-solving, perception, language understanding, etc.

To achieve the above factors in a machine or software, Artificial Intelligence requires the following disciplines:

- Mathematics

- Biology

- Psychology

- Sociology

- Computer Science

- Neuroscience (the study of neurons)

- Statistics

History of AI

Here is a brief account of the history of Artificial Intelligence (AI) since John McCarthy first coined the term in 1956:

1956: Birth of AI

- John McCarthy, a computer scientist, coined the term “Artificial Intelligence” during the Dartmouth Conference in 1956. This event is considered the official beginning of AI as a field of study. The goal was to explore ways to make machines simulate human intelligence.

1950s – 1960s: Early Enthusiasm and Foundational Research

- Early AI research focused on problem-solving and symbolic methods. Programs like Logic Theorist (1955) and General Problem Solver (1957) were developed to mimic human reasoning.

- ELIZA (1966), an early natural language processing program, demonstrated simple conversation capabilities.

1970s: AI Winter and Realization of Challenges

- The initial optimism faced a reality check when the limitations of AI became clear. Computers lacked processing power, and AI programs struggled with complexity.

- This period, known as the first AI winter, saw reduced funding and interest due to slow progress.

1980s: Revival with Expert Systems

- AI experienced a resurgence with the development of expert systems, which used rules to mimic human decision-making in specific domains (e.g., medical diagnosis).

- Commercial interest grew, and AI applications began to appear in business and industry.

1990s: Machine Learning and Real-World Applications

- AI shifted focus from symbolic reasoning to machine learning—using data to train algorithms.

- Successes like IBM’s Deep Blue beating world chess champion Garry Kasparov in 1997 showcased AI’s potential in specialized tasks.

2000s: Data-Driven AI and Breakthroughs in Learning

- The rise of the internet and increased data availability led to advances in data-driven AI. Machine learning algorithms, especially neural networks, became more effective.

- Technologies like speech recognition and computer vision showed practical AI applications in everyday life, paving the way for voice assistants such as Apple’s Siri (released in 2011).

2010s: Deep Learning Revolution

- The development of deep learning (a subset of machine learning using deep neural networks) revolutionized AI, leading to breakthroughs in image recognition, natural language processing, and autonomous systems.

- AI-powered products like self-driving cars, virtual assistants, and recommendation systems became more common.

2020s: AI in Every Domain

- AI continues to evolve rapidly, with applications in healthcare, finance, robotics, and more.

- Areas like reinforcement learning, generative AI, and ethical AI research are expanding.

- AI systems like GPT-3 and ChatGPT demonstrated advanced language understanding and generation, showcasing the power of modern AI models.

Today: AI’s Growing Impact

- AI is integrated into numerous fields, from autonomous vehicles to personalized medicine.

- Research focuses on creating more general AI (Artificial General Intelligence or AGI) and ensuring ethical and safe use of AI technologies.

This journey from its inception in 1956 to today illustrates AI’s remarkable evolution and its profound impact on technology and society.

AI Life-Cycle

1. Gathering Data

Collecting all the information (data) that you need to train your machine learning model. The quality and quantity of the data you gather directly impact how well your model will learn and perform. If the data is insufficient or irrelevant, the model may not work correctly. Ex: If you want to build a model that predicts house prices, you gather data about houses, such as size, number of rooms, location, and past sale prices.
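A minimal sketch of this step, assuming the house data arrives as CSV text. The column names (`size_sqft`, `rooms`, `location`, `sale_price`) and the three sample rows are made up for illustration; in practice the text would come from a file, a database export, or a web source.

```python
import csv
import io

# Made-up sample data standing in for a real file or database export.
RAW_CSV = """size_sqft,rooms,location,sale_price
850,2,suburb,120000
1200,3,city,210000
1500,4,city,260000
"""

def load_houses(csv_text):
    """Read CSV text into a list of dictionaries, one per house."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [dict(row) for row in reader]

houses = load_houses(RAW_CSV)
print(len(houses), "houses collected")
print(houses[0])
```

Note that every value is still a string at this point; converting and cleaning the values belongs to the later steps.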

2. Data Preparation

Cleaning and organizing the collected data to make it suitable for analysis. This involves handling missing data, removing duplicates, and correcting errors. Raw data is often messy and inconsistent. Preparing it ensures the model learns from accurate and relevant data, which improves its performance. Ex: If your house price data has missing values for some houses’ sizes, you might remove those records or fill in the missing data with an average value.
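The cleaning described here can be sketched as follows. The record layout (`size`, `price`) and the helper name `prepare` are assumptions for this example; it removes exact duplicates and fills a missing size with the average of the known sizes.

```python
def prepare(records):
    """Drop exact duplicate records, then fill missing sizes with the average size.

    Assumes at least one record has a known size.
    """
    unique = []
    for record in records:
        if record not in unique:          # keep only the first copy of a record
            unique.append(record)

    known_sizes = [r["size"] for r in unique if r["size"] is not None]
    average_size = sum(known_sizes) / len(known_sizes)

    return [
        {**r, "size": average_size if r["size"] is None else r["size"]}
        for r in unique
    ]

raw = [
    {"size": 1000, "price": 150000},
    {"size": None, "price": 180000},   # missing size
    {"size": 1400, "price": 230000},
    {"size": 1000, "price": 150000},   # duplicate of the first record
]
clean = prepare(raw)
for record in clean:
    print(record)
```

Filling with an average is only one option; removing the incomplete record, as the text also mentions, is sometimes the safer choice.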

3. Data Wrangling

Transforming and structuring the data into a usable format. This includes filtering data, transforming data types, creating new features, and selecting the most relevant features. Wrangling makes the data ready for machine learning algorithms by ensuring it is in the correct format and contains useful information. Ex: Converting a “date of sale” field into separate features like “year of sale” or “season of sale” to capture more information.
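The "date of sale" example can be sketched like this. The field names and the month-to-season table are assumptions; the table uses northern-hemisphere seasons and would need changing for other regions.

```python
from datetime import date

# Assumption: northern-hemisphere seasons grouped by calendar month.
SEASON_BY_MONTH = {
    12: "winter", 1: "winter", 2: "winter",
    3: "spring", 4: "spring", 5: "spring",
    6: "summer", 7: "summer", 8: "summer",
    9: "autumn", 10: "autumn", 11: "autumn",
}

def add_sale_features(record):
    """Return a copy of the record with year and season derived from the sale date."""
    sold_on = date.fromisoformat(record["date_of_sale"])   # expects "YYYY-MM-DD"
    return {
        **record,
        "year_of_sale": sold_on.year,
        "season_of_sale": SEASON_BY_MONTH[sold_on.month],
    }

house = {"date_of_sale": "2021-07-15", "price": 200000}
print(add_sale_features(house))
```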

4. Analyze Data

Understanding the data through exploratory data analysis (EDA). This involves visualizing data, finding patterns, correlations, and summarizing key statistics. Analyzing data helps you understand its characteristics, detect outliers, and gain insights, which can guide the selection of the right model or approach. Ex: Using graphs or charts to see if house prices are more closely related to the size of the house or its location.
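One simple EDA tool is the correlation coefficient, which ranges from -1 to 1 and measures how closely two columns move together. The sketch below computes it by hand for made-up data; using distance to the city centre as a numeric stand-in for "location" is an assumption of this example.

```python
from math import sqrt

def correlation(xs, ys):
    """Pearson correlation between two equally long lists of numbers."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)

# Made-up data for five houses.
sizes = [800, 1000, 1200, 1500, 1800]
distance_to_centre_km = [12, 3, 9, 5, 7]
prices = [120000, 150000, 185000, 220000, 270000]

print("size vs price:    ", round(correlation(sizes, prices), 2))
print("distance vs price:", round(correlation(distance_to_centre_km, prices), 2))
```

For this sample, size is far more strongly correlated with price than distance is, which would suggest that size is the more useful feature here.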

5. Train the Model

Feeding the prepared data into a machine learning algorithm to teach it to recognize patterns or make predictions. Training allows the model to learn from the data by finding patterns, relationships, or rules that can be used to make predictions on new data. Ex: Using a machine learning algorithm like linear regression to find the relationship between house features (like size or location) and their prices.
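A minimal sketch of training, assuming a single feature (size) and simple linear regression fitted by least squares. The helper name `fit_line` and the sample data are illustrative; the prices were made up to follow "100 per square foot plus 50000" exactly, so the learned line is easy to check.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept for one feature."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        / sum((x - mean_x) ** 2 for x in xs)
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Made-up training data: price = 100 * size + 50000.
sizes = [1000, 1500, 2000, 2500]
prices = [150000, 200000, 250000, 300000]

slope, intercept = fit_line(sizes, prices)
print("price is roughly", slope, "* size +", intercept)
```

"Training" here means nothing more than finding the slope and intercept that best match the examples; larger models do the same thing with many more parameters.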

6. Test the Model

Evaluating the trained model’s performance using a separate set of data (test data) that the model has never seen before. Testing helps you see how well the model generalizes to new, unseen data and ensures it is accurate and reliable. Ex: Checking if the house price predictions made by your model are close to actual prices using a new set of house data.
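Testing can be sketched by comparing predictions with actual prices for houses the model never saw. The slope and intercept below are assumed to come from an earlier training step, and mean absolute error is just one of several possible measures.

```python
def predict_price(size, slope=100.0, intercept=50000.0):
    """A trained model; the slope and intercept are assumed values from training."""
    return slope * size + intercept

def mean_absolute_error(actual, predicted):
    """Average size of the prediction error, ignoring its direction."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

# Made-up test data that was NOT used for training.
test_sizes = [1200, 1800, 2200]
test_prices = [172000, 226000, 275000]

predictions = [predict_price(size) for size in test_sizes]
error = mean_absolute_error(test_prices, predictions)
print("average error on unseen houses:", round(error))
```

A small error on unseen data suggests the model generalizes; a large one suggests it needs more data, better features, or a different algorithm.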

7. Deployment

Putting the trained and tested model into a real-world environment where it can make predictions or decisions based on new data. Deployment allows the model to be used by end-users or integrated into applications to solve real problems. Ex: Integrating the house price prediction model into a website where users can enter house details and get an estimated price.
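Deployment details depend heavily on the platform, so the sketch below shows only the core idea: a function that a website could call for each request. The `MODEL` values, the `form_data` layout, and the function name `estimate_price` are assumptions. Unlike in training, the input comes from real users and must be validated.

```python
import math

MODEL = {"slope": 100.0, "intercept": 50000.0}   # assumed values from training

def estimate_price(form_data):
    """Handle one request: validate the user's input and return an estimate."""
    try:
        size = float(form_data["size"])
    except (KeyError, TypeError, ValueError):
        return {"ok": False, "error": "Please enter the house size as a number."}
    if not math.isfinite(size) or size <= 0:
        return {"ok": False, "error": "The house size must be a positive number."}

    price = MODEL["slope"] * size + MODEL["intercept"]
    return {"ok": True, "estimated_price": round(price)}

print(estimate_price({"size": "1500"}))
print(estimate_price({"size": "big"}))
```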

Turing Test: Definition, Use, and Importance

What is the Turing Test?

The Turing Test is a test of a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human. It was proposed by the British mathematician and computer scientist Alan Turing in his 1950 paper “Computing Machinery and Intelligence.”

How the Turing Test Works:

- In the Turing Test, a human evaluator interacts with two unseen participants: a human and a machine (typically through text-based communication).

- The evaluator asks questions and receives answers from both the human and the machine.

- If the evaluator cannot reliably tell which participant is the machine and which is the human, the machine is said to have passed the Turing Test.
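One possible way to make "cannot reliably tell" concrete is to run several rounds and count how often the evaluator identifies the machine correctly. The sketch below does this; the 60% cut-off and the function name `machine_passes` are assumptions of this example, not an official rule of the test.

```python
def machine_passes(guesses, truth, max_correct_rate=0.6):
    """True if the evaluator identifies the machine no more often than the cut-off."""
    correct = sum(1 for guess, actual in zip(guesses, truth) if guess == actual)
    return correct / len(truth) <= max_correct_rate

# In each round the machine is hidden behind label "A" or "B".
truth   = ["A", "B", "B", "A", "B", "A", "A", "B", "A", "B"]
# The evaluator's guess, per round, of which label hides the machine.
guesses = ["A", "A", "B", "B", "B", "A", "B", "A", "A", "A"]

print(machine_passes(guesses, truth))   # evaluator is right in 5 of 10 rounds
```

An evaluator who is right about half the time is doing no better than flipping a coin, which is the situation the test describes.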

Use of the Turing Test:

- The Turing Test is used as a measure of a machine’s ability to demonstrate human-like intelligence and behavior.

- It has been a guiding concept in AI research, especially in developing conversational agents, chatbots, and natural language processing (NLP) systems.

- It provides a practical benchmark to evaluate the performance of AI systems in generating human-like responses.

Importance of the Turing Test:

- Conceptual Benchmark for AI: The Turing Test has served as a conceptual goal for AI research, encouraging the development of machines that can think, understand, and respond like humans.

- Stimulates Research in NLP: It has driven advances in natural language processing, where AI systems must understand and generate human language to pass the test.

- Encourages Debate on AI and Intelligence: The Turing Test has sparked important discussions on what constitutes “intelligence” and whether machine intelligence can truly replicate or surpass human intelligence.

- Evaluates Machine Learning Models: It helps in evaluating machine learning models that aim to simulate human conversation and behavior, such as chatbots and virtual assistants.

- Ethical and Philosophical Implications: The test has philosophical significance, raising questions about consciousness, mind, and what it means to be human.

Limitations of the Turing Test:

- Narrow Focus: It primarily tests a machine’s ability to mimic human conversation, not necessarily its understanding or reasoning capabilities.

- Misleading Results: A machine could pass the Turing Test by using tricks or programmed responses without genuinely understanding the conversation.

- Ignores Other Aspects of Intelligence: The Turing Test does not measure other forms of intelligence, such as emotional or social intelligence, problem-solving, or creativity.

Advantages of Artificial Intelligence

Following are some main advantages of Artificial Intelligence:

- High accuracy with fewer errors: AI systems make fewer errors and achieve high accuracy because they take decisions based on prior experience or information.

- High speed: AI systems can make decisions very quickly, which is one reason an AI system can beat a chess champion at chess.

- High reliability: AI machines are highly reliable and can perform the same action multiple times with high accuracy.

- Useful for risky areas: AI machines can be helpful in situations such as defusing a bomb or exploring the ocean floor, where employing a human can be risky.

- Digital assistant: AI can provide digital assistance to users. For example, various e-commerce websites currently use AI to show products that match customer requirements.

- Useful as a public utility: AI can be very useful for public utilities, such as self-driving cars that can make our journeys safer and hassle-free, facial recognition for security purposes, and natural language processing to communicate with humans in human language.

Disadvantages of Artificial Intelligence

Every technology has some disadvantages, and the same goes for Artificial Intelligence. Despite being so advantageous, it still has some disadvantages that we need to keep in mind while creating an AI system. Following are the disadvantages of AI:

- High cost: The hardware and software requirements of AI are very costly, and AI systems require a lot of maintenance to keep up with current requirements.

- Can’t think outside the box: Even though we are making smarter machines with AI, they still cannot think outside the box; a robot will only do the work for which it is trained or programmed.

- No feelings and emotions: AI machines can be outstanding performers, but they do not have feelings, so they cannot form any emotional attachment with humans, and they may sometimes be harmful to users if proper care is not taken.

- Increased dependency on machines: As technology advances, people are becoming more dependent on devices and may lose some of their mental capabilities.

- No original creativity: Humans are creative and can imagine new ideas, but AI machines cannot yet match this power of human intelligence; they cannot be truly creative and imaginative.

Application of AI

Artificial Intelligence has various applications in today’s society. It is becoming essential because it can solve complex problems efficiently in multiple industries, such as healthcare, entertainment, finance, education, etc. AI is making our daily life more comfortable and faster.

Following are some sectors which have the application of Artificial Intelligence:

1. AI in Astronomy

- Artificial Intelligence can be very useful for solving complex problems about the universe. AI technology can help us understand the universe, such as how it works and its origin.

2. AI in Healthcare

- In the last five to ten years, AI has become more advantageous for the healthcare industry, and it is going to have a significant impact on this industry.

- The healthcare industry is applying AI to make better and faster diagnoses than humans. AI can help doctors with diagnoses and can alert them when patients are worsening, so that medical help can reach the patient before hospitalization.

3. AI in Gaming

- AI can be used for gaming. AI machines can play strategic games like chess, where the machine needs to think through a large number of possible positions.

4. AI in Finance

- AI and the finance industry are a good match for each other. The finance industry is implementing automation, chatbots, adaptive intelligence, algorithmic trading, and machine learning in financial processes.

5. AI in Data Security

- The security of data is crucial for every company, and cyber-attacks are growing very rapidly in the digital world. AI can be used to make your data safer and more secure. Examples such as the AEG bot and the AI2 Platform are used to detect software bugs and cyber-attacks more effectively.

6. AI in Social Media

- Social media sites such as Facebook, Twitter, and Snapchat contain billions of user profiles, which need to be stored and managed in a very efficient way. AI can organize and manage massive amounts of data. AI can analyze lots of data to identify the latest trends, hashtags, and requirements of different users.

7. AI in Travel & Transport

- AI is in high demand in the travel industry. AI can perform various travel-related tasks, from making travel arrangements to suggesting hotels, flights, and the best routes to customers. The travel industry is using AI-powered chatbots that can interact with customers in a human-like way for better and faster responses.

8. AI in Automotive Industry

- Some automotive companies are using AI to provide virtual assistants to their users for better performance. For example, Tesla has introduced TeslaBot, an intelligent virtual assistant.

- Various companies are currently working on developing self-driving cars that can make your journey safer and more secure.

9. AI in Robotics

- Artificial Intelligence has a remarkable role in robotics. Usually, general robots are programmed to perform some repetitive task, but with the help of AI, we can create intelligent robots that perform tasks based on their own experience without being pre-programmed.

- Humanoid robots are the best examples of AI in robotics. Recently, intelligent humanoid robots named Erica and Sophia have been developed that can talk and behave like humans.

10. AI in Entertainment

- We already use AI-based applications in our daily life through entertainment services such as Netflix or Amazon. With the help of ML/AI algorithms, these services recommend programs or shows.

11. AI in Agriculture

- Agriculture is an area that requires various resources, labor, money, and time for the best results. Nowadays agriculture is becoming digital, and AI is emerging in this field. Agriculture is applying AI in agricultural robotics, soil and crop monitoring, and predictive analysis. AI in agriculture can be very helpful for farmers.

12. AI in E-commerce

- AI is providing a competitive edge to the e-commerce industry, and it is increasingly in demand in the e-commerce business. AI helps shoppers discover associated products in their recommended size, color, or even brand.

13. AI in Education

- AI can automate grading so that the tutor has more time to teach. An AI chatbot can communicate with students as a teaching assistant.

- In the future, AI could work as a personal virtual tutor for students, easily accessible at any time and any place.

Ethics in Artificial Intelligence

Ethics in Artificial Intelligence (AI) involves ensuring that AI systems are designed and used in ways that are fair, transparent, and beneficial to society. Ethical considerations are crucial to prevent AI from causing harm, perpetuating bias, or being used irresponsibly. Below are some key aspects of AI ethics:

1. Bias:

Bias in AI occurs when an AI system produces results that are systematically prejudiced due to biased data or algorithms. Ex: An AI model trained on biased data (e.g., data that over-represents certain groups) may favor those groups and discriminate against others in areas like hiring, lending, or law enforcement.

Importance: Reducing bias is critical to ensure fairness and prevent discrimination.

2. Prejudice:

Prejudice in AI refers to unfair preconceived notions or stereotypes built into the AI system, either intentionally or unintentionally. Ex: An AI that predicts criminal behavior based on biased crime data may unfairly target certain communities.

Importance: Addressing prejudice is essential to ensure AI does not reinforce harmful stereotypes or inequalities.

3. Fairness:

Fairness in AI means treating all individuals or groups equitably, without favoritism or discrimination. Ex: Ensuring that a facial recognition system works equally well for all races and genders, rather than performing better for one group.

Importance: Fairness is necessary to build trust in AI systems and promote social justice.
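One simple fairness check, sketched below, is to measure accuracy separately for each group and look at the gap between the best-served and worst-served group. The group names, the results, and the function names are made up for illustration; real fairness audits use several complementary measures.

```python
def accuracy_by_group(results):
    """results: list of (group, was_correct) pairs -> {group: accuracy}."""
    totals, correct = {}, {}
    for group, was_correct in results:
        totals[group] = totals.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + (1 if was_correct else 0)
    return {group: correct[group] / totals[group] for group in totals}

def fairness_gap(results):
    """Difference between the best and worst per-group accuracy (0 means equal)."""
    accuracies = accuracy_by_group(results)
    return max(accuracies.values()) - min(accuracies.values())

# Made-up outcomes of a recognition system for two groups of people.
results = (
    [("group_a", True)] * 9 + [("group_a", False)] * 1
    + [("group_b", True)] * 6 + [("group_b", False)] * 4
)

print(accuracy_by_group(results))
print("gap:", round(fairness_gap(results), 2))
```

A large gap, as in this sample, is a signal that the system works noticeably better for one group and needs more balanced data or a different design.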

4. Accountability:

Accountability in AI involves ensuring that there are clear responsibilities for the actions and decisions made by AI systems. Ex: If an AI system causes harm (e.g., an autonomous vehicle accident), it should be clear who is responsible: the developers, the users, or another entity.

Importance: Accountability ensures that those who build and deploy AI systems are held responsible for their impact on society.

5. Transparency:

Transparency means making the workings of an AI system understandable to users and stakeholders, including how decisions are made. Ex: Clearly explaining how a loan approval AI system makes its decisions to applicants.

Importance: Transparency helps build trust in AI systems and allows for scrutiny to prevent misuse.

6. Interpretability:

Interpretability refers to the ability to understand how an AI model arrives at a specific decision or output. Ex: Being able to explain why a medical diagnosis AI system recommended a particular treatment.

Importance: Interpretability is essential for debugging AI systems, ensuring their accuracy, and making them understandable to humans.

7. Explainability:

Explainability is the degree to which an AI system’s decision-making process can be understood by humans, particularly non-experts. Ex: Providing clear, user-friendly explanations for why an AI-powered credit score system declined a loan application.

Importance: Explainability helps users and stakeholders understand, trust, and effectively interact with AI systems.
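To illustrate transparency and explainability together, the sketch below shows a deliberately simple, rule-based loan check that returns its reasons along with its decision. The rules, thresholds, and field names are hypothetical; real credit systems are far more complex, which is exactly why explaining them takes extra effort.

```python
def decide_loan(applicant, min_income=30000, max_debt_ratio=0.4):
    """Return a decision together with plain-language reasons for any refusal."""
    reasons = []
    if applicant["income"] < min_income:
        reasons.append(
            f"Yearly income {applicant['income']} is below the minimum of {min_income}."
        )
    if applicant["monthly_debt"] * 12 > max_debt_ratio * applicant["income"]:
        reasons.append(
            f"Yearly debt payments are more than {max_debt_ratio:.0%} of income."
        )
    return {"approved": not reasons, "reasons": reasons}

declined = decide_loan({"income": 25000, "monthly_debt": 1000})
print(declined["approved"])
for reason in declined["reasons"]:
    print("-", reason)
```

Because every refusal is tied to a named rule, the applicant can see what would need to change, which is the kind of user-friendly explanation described above.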